Tech Thoughts Newsletter – 17 November 2023.

Market: It was all about inflation again this week, with better CPI numbers driving the market higher early in the week. Worth noting were the comments from Walmart, the world’s biggest retailer, on its earnings call: “In the US, we may be managing through a period of deflation in the months to come. And while that would put more unit pressure on us, we welcome it because it’s better for our customers.” Might it be true? Was the Fed listening in? If so, it’s good for tech sentiment.

Portfolio: We made no major changes to the portfolio this week.

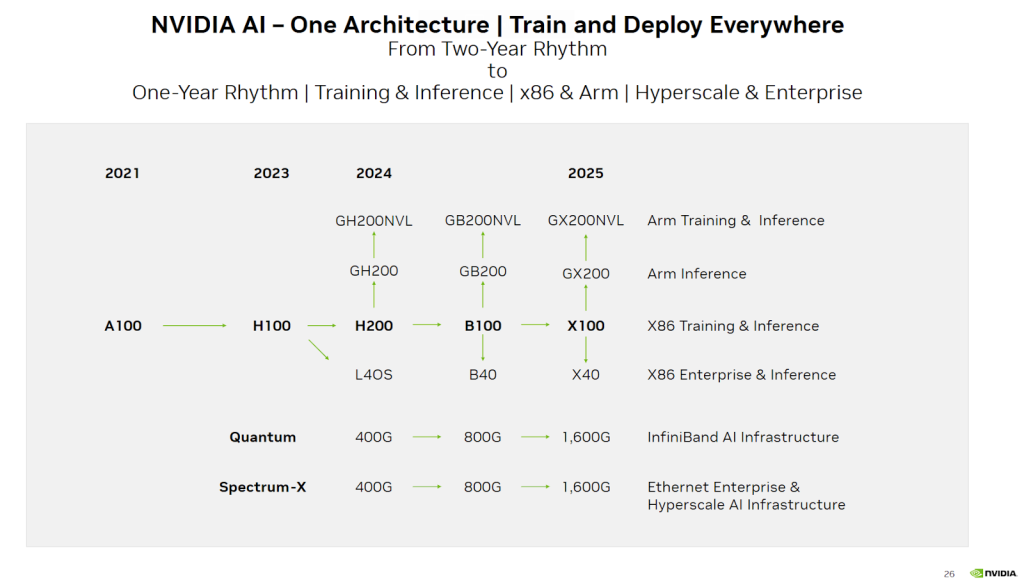

First up, Nvidia news (ahead of its results next week): in October, we noted that Nvidia’s latest investor presentation included this slide detailing its roadmap and a move to a one-year “cadence”, with its H200 chip (the “successor” to its current, “sold out” H100 AI chip) coming in early 2024 and the B100 following later in the year.

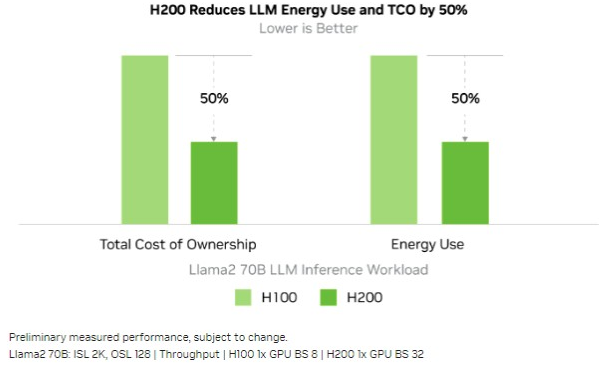

This week, Nvidia officially launched its H200, the first time we’d seen the chip’s full specs. The highlight is the inclusion of HBM3e (high bandwidth memory). We’ve spoken before about the importance of memory in the AI world – given the need to store and retrieve large amounts of data. The H200 is further evidence of that (see the performance and efficiency benefits of the extra memory below).

As a bit of background, AMD originally developed HBM for gaming: it found that scaling memory to keep pace with rising GPU performance required more and more power to be diverted away from the GPU, hurting performance. HBM was developed as a new, low-power memory chip. What was a problem in gaming has become a much bigger issue in datacentres and AI, given the volume of data that has to be stored and retrieved, and the speed required, in both training and inference.

That’s also what makes the advanced packaging (CoWoS – chip-on-wafer-on-substrate) so important – HBM and CoWoS go hand in hand, effectively enabling short and dense connections between the logic and HBM (more so than possible on a PCB) in turn driving up GPU utilisation and performance.

The H100 includes 96GB of HBM3 memory, whereas the H200 has 141GB of faster HBM3e memory. Beyond that, the chips are very similar (both are built on TSMC’s 5nm node). That makes the performance improvements (driven by the memory content) impressive.

It’s important because:

- Nvidia has $11.15bn of purchase commitments (and AMD $5bn), which relate primarily to CoWoS and HBM, now the main bottlenecks in the AI supply chain. That gives both Nvidia and AMD a significant barrier to entry vs. the competition, as they have effectively secured supply of the bulk of TSMC’s capacity. We own Nvidia, AMD and TSMC (which has effectively had its CoWoS capacity expansion underwritten).

- It means that AMD’s strategy of stuffing its MI300X with HBM3 (a total of 192GB) looks credible, though we haven’t yet seen proper performance comparisons. While we expect Nvidia to remain the dominant GPU supplier in training, we’ve said before that there are many reasons why AMD’s MI300X can be a credible alternative GPU. We expect MI300X sales to be materially above AMD’s guidance next year.

- It makes HBM capacity expansion at the memory players (Hynix, Samsung, Micron) even more important, and that will be a significant driver of memory capex and spending on semicap equipment. Relatedly, there were reports late last week that SK Hynix plans to increase its FY24 capex by 50% yr/yr; we think DRAM capex has to rise in 2024, given such low spending levels in 2023. In September, Micron also guided its capex up yr/yr for the fiscal year ending August 2024, driven by a doubling of HBM capacity. While we don’t own any memory players in the portfolio, we do own semicap equipment players whose tools will be bought to build out this capacity (see also Applied Materials’ results this week below).
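To put the memory figures in this section side by side, here is a minimal Python sketch that uses only the capacities quoted above (96GB for the H100, 141GB for the H200, 192GB for the MI300X). It compares capacity alone; bandwidth, memory generation and delivered performance are deliberately left out, so treat the ratios as a rough guide rather than a performance comparison.

```python
# HBM capacities (GB) as quoted in this note; capacity only, not bandwidth.
HBM_GB = {
    "Nvidia H100": 96,
    "Nvidia H200": 141,
    "AMD MI300X": 192,
}


def capacity_ratio(chip: str, baseline: str = "Nvidia H100") -> float:
    """Return how many times more HBM `chip` carries than `baseline`."""
    return HBM_GB[chip] / HBM_GB[baseline]


for chip, gb in HBM_GB.items():
    print(f"{chip}: {gb}GB, {capacity_ratio(chip):.2f}x the H100")

print(f"MI300X vs H200: {capacity_ratio('AMD MI300X', 'Nvidia H200'):.2f}x")
```

On these figures the H200 carries roughly 1.47x the H100’s memory, and the MI300X roughly 1.36x the H200’s.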

Onto more newsflow and results:

Microsoft’s infrastructure build-out in focus at Ignite

- It’s worth following the Nvidia H200 commentary with the main news from Microsoft’s Ignite conference this week. Nvidia CEO Jensen Huang was on stage alongside Microsoft CEO Satya Nadella (I’ll come to your investor day if you come to mine).

- We’ve commented before on the vast amount Microsoft is spending on its infrastructure build-out: its data centre capex will be >$30bn this year (fun fact: the Apollo space program cost approximately $25bn, albeit in 1960s and early-1970s dollars).

- The most significant slice of this is going to Nvidia H100 GPUs, but Microsoft also announced that it will build its own AI chips, joining Google (TPU) and Amazon (Trainium and Inferentia), fellow hyperscalers that offer their own ASICs to customers for AI workloads.

- There are two chips: Cobalt, a CPU, and Maia, an ASIC. ASICs (Application Specific Integrated Circuits) differ from GPUs in that they are processors designed for a particular use rather than for general-purpose workloads. We’ve discussed before that GPUs are designed for parallel processing at large scale across different workloads (effectively performing the same calculation over and over again) and are very flexible. That’s what makes CUDA on top of them so important, because the flexibility also makes it quite complex to write parallelised software. ASICs are built for a specific application: they typically deliver higher performance (like for like) but can run a narrower range of workloads.

- It makes sense for the hyperscalers to move into ASICs for specific workloads, which they can run more economically while also reducing their dependence on Nvidia chips.

- Is it bad news for Nvidia? No. We’ve said that we expect more chip winners in AI, given that no player wants to be tied into one dominant supplier, Nvidia. Given their high utilisation, extensive user-installed base, and specific use cases, the hyperscalers are motivated to build their own to handle specific workloads.

- It is also worth pointing out that, in addition to the partnership with Nvidia, Microsoft announced an expanded partnership with AMD, under which it is deploying AMD’s MI300X chip; we think Microsoft could account for over half of AMD’s MI300X revenue next year.

Portfolio view: We own Microsoft. Outside of the chip companies benefiting directly from capex, it will be the first company to see meaningful revenue directly from AI, thanks to Copilot. Its competitive moat, already high thanks to sticky B2B customers in its Office software business, is sustained in the move to AI. We commented last week on Microsoft’s advantage in optimising GPU utilisation across its full stack of software products. That scale advantage also means it can optimise its chip stack too, including building its own chips to further reduce the cost of compute. It’s a perfect flywheel: scale enables it to offer AI compute at lower prices, which drives more innovation on top of its infrastructure and more AI use cases (à la OpenAI).

But there is no change to our view on Nvidia and AMD (both owned): as we said at the start, they dominate a constrained supply chain (and it’s worth noting that Microsoft’s chips will also be built on TSMC’s 5nm node).

China and Nvidia chips

- Following on from our letter last week, which spoke to Nvidia’s H20 chip release specifically for the Chinese market, Tencent was very explicit about its stockpiling efforts of Nvidia chips on its earnings call:

- “In terms of the chip situation, right now, we actually have one of the largest inventory of AI chips in China among all the players. And one of the key things that we have done was we were the first to put an order for H800, and that allow us to have a pretty good inventory of H800 chips. So we have enough chips to continue our development of Hunyuan for at least a couple more generations. And the ban does not really affect the development of Hunyuan and our AI capability in the near future.”

- Alibaba meanwhile announced that it would no longer spin off its cloud business given “uncertainties created by recent US export restrictions on advanced computing chips”. To be clear, that doesn’t mean they haven’t stockpiled lots of chips too, just that the IPO filing “risks” section was likely perceived as too long for any investor to accept.

- Lenovo spoke to using the new Nvidia China chips within its products.

- Biden and Xi met this week at the APEC summit, though semis didn’t feature in any of the official statements. TSMC founder Morris Chang is meeting Biden too (Chang is Taiwan’s representative at APEC). It could be quite an interesting semiconductor discussion if Chang and Xi meet in the corridor.

Portfolio view: There is a potential risk that there has been a large, unsustainable pull-forward in demand from China. Nvidia knew more restrictions were coming, and we suspect it put a lot of China demand at the top of the queue and front-loaded those orders to get them through. What makes us relatively comfortable is that (1) we know from Dell, Super Micro and others that there is still a very significant backlog of Western demand, and (2) the H20 chip likely sustains that pattern of behaviour (ordering lots in case it gets restricted too). It’s something we need to keep watching in the commentary, but for now, we think Western demand (for H100 and now H200) takes us to Q3/Q4 next year, which then takes us to the B100 refresh cycle. We don’t own any Chinese companies: the political tail risk makes the downside (to both the multiple and earnings) difficult to frame.

EV shift and semiconductor content increases playing out – all about China

- Infineon (owned) issued an overall “ok” set of results. Still, investor focus is all about its auto business, which continued to show resilience and is guided for double-digit growth again next year (after 26% growth this year).

- There has been a (we think unwarranted) bear case around Infineon’s auto business this year – with some investors arguing that demand would fall and pricing with it. This set of results is another data point showing that it is not happening.

- We think one of the issues in the market is too much focus on the global OEMs (US/European/Japanese), who are all reporting higher EV inventory and struggling EV sales (ex Tesla). What the market needs to look at more closely is China. We spoke last week about the record-high October EV sales and the higher-end models from Li Auto likely to challenge BMW et al.

- More China EV news this week, as Xiaomi’s SU7 EV specs and pictures were leaked. Again, this is very much targeting the high end (the Tesla Model S and Porsche Taycan are its performance comparisons). As we said last week, the share shift away from international OEMs to Chinese ones, not just domestically in China, is likely to accelerate.

- As Infineon’s CEO said on the conference call: “We grow with the winners and also don’t forget the Chinese OEMs want to go into export and especially in the export situation, quality and reliability are key for them.” We don’t know yet, but there is a reasonable probability that Infineon might be supplying Xiaomi with SiC components.

- An interesting data point: Infineon’s content in a high-end Chinese car is worth more than €800. If you do the maths on that for BYD’s 3 million cars, that’s €2.4bn, vs what we think is ~€2bn of current China auto revenue. It means that as the premiumisation story plays out in EV, the revenue growth potential for Infineon is significant. Auto OEMs are competing on content and features, much like the old smartphone world, and that is a multi-year driver for the semiconductor companies that supply them (and unlike in smartphones, these components are designed in for 7-year cycles).

- Infineon expects double-digit growth in its auto business next year, assuming flat (~1%) car production. We speak below on the difficulty of investing in semis markets that don’t have a structural content buffer, which makes you much more sensitive to volatile unit numbers. For Infineon, 8% unit growth in autos this year has translated to 26% top-line growth.
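The Infineon arithmetic above can be made explicit with a short Python sketch. It treats auto revenue as units multiplied by semis content per car, a simplification we are assuming here that ignores pricing, mix and FX. It also applies the €800 high-end content figure to every BYD car, so the €2.4bn is an upper-bound illustration rather than a forecast, and it takes next year’s “double-digit” growth as 10%, another assumption.

```python
def content_revenue_eur_bn(content_per_car_eur: float, cars_millions: float) -> float:
    """Revenue in EUR bn if every car carries the same semis content."""
    # EUR per car x millions of cars = EUR millions; divide by 1,000 for EUR bn.
    return content_per_car_eur * cars_millions / 1000


def implied_content_growth(revenue_growth: float, unit_growth: float) -> float:
    """Content-per-car growth implied if revenue = units x content per car."""
    return (1 + revenue_growth) / (1 + unit_growth) - 1


# Upper bound: all 3m BYD cars at the €800 high-end content level.
byd_potential = content_revenue_eur_bn(800, 3)
current_china_auto = 2.0  # our ~€2bn estimate of Infineon's China auto revenue

# This year: 26% auto revenue growth on 8% unit growth.
content_this_year = implied_content_growth(0.26, 0.08)
# Next year: "double-digit" taken as 10% (an assumption) on ~1% unit growth.
content_next_year = implied_content_growth(0.10, 0.01)

print(f"BYD potential: €{byd_potential:.1f}bn vs ~€{current_china_auto:.1f}bn today")
print(f"Implied content growth: {content_this_year:.1%} this year, "
      f"{content_next_year:.1%} next year")
```

On this simplification, this year’s 26% growth on 8% more units implies content per car rose by roughly 17%, and even 10% growth on ~1% more units needs only ~9% content growth.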

Portfolio view: Auto, along with AI, is a bright spot in semis end demand, with the structural increase in power semis in the move to EV. We continue to think the competitive environment for the global auto manufacturers is challenging (Tesla’s price reductions speak to that), and we don’t own any auto OEMs (manufacturers). But there are only a handful of auto semiconductor suppliers, whose products are designed in over long cycles and which can maintain pricing power; this makes it an attractive place to be in the value chain.

“Cost of money” and billings vs revenue

- Palo Alto (owned) beat the quarter on top-line and profitability but disappointed with their billings guidance.

- On the call, they spoke to a higher cost of money, resulting in more customers asking for deferred payment terms, discounts and financing on longer (~3-year) deals. Effectively, customers that previously signed and paid for 3-year contracts upfront are now asking for discounts on them, and instead of agreeing, Palo Alto is asking them to move to annual payments. That impacts billings (because deferred revenue is lower).

- The company argues this is only about giving customers flexibility: “You want to pay me, want me to do a three-year deal, you got to go finance it in benefits. I can do that, but I can say ‘just pay me on an annual basis, I’m okay.’ I’ll collect my money every year. If I go in that direction, my billing will change. It does not change anything in my pipeline, close rates or demand function. Those are my points. I think we’re going to keep having this debate, where you keep calling it guiding down on billings, I’m going to keep calling it flexibility, you want to keep calling it guideline downward billing, so I’ll keep telling it doesn’t change my numbers. So we agree that we will be saying that because I don’t – nothing has changed the prospects of Palo Alto of three months ago.”

- The big question is, “do we believe them?” From a demand perspective, Palo Alto has stood out in cyber security, benefitting from spend consolidation and outperforming the industry on growth (and market share) and profitability. The billings/revenue divergence is a dynamic we’ve seen in prior cycles (SAP had customers ask for terms around its maintenance revenue), and is something we’ve seen from peers, whom Palo Alto is still outperforming (Palo Alto guided for billings growth of 15-18% next quarter, while Fortinet’s billings are set to decline).

- Importantly, for a fast-becoming incumbent, it continues to innovate and capture industry growth with completely new products and markets. Its next-gen security (NGS) business has more than $3bn of annual recurring revenue (ARR) after a little over three years and is still growing 50% yr/yr. That suggests demand is better than billings imply (noting too that NGS contracts tend to be shorter in duration).
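To illustrate why payment terms move billings but not revenue, here is a stylised Python sketch of a hypothetical $300 three-year subscription recognised evenly ($100 a year). The contract size, the even recognition and the function and field names are our assumptions for illustration, not Palo Alto’s actual terms or accounting.

```python
from dataclasses import dataclass


@dataclass
class YearFigures:
    billings: float
    revenue: float
    deferred_revenue_end: float  # billed but not yet recognised


def contract_schedule(total_value: float, years: int, upfront: bool) -> list[YearFigures]:
    """Simplified ratable recognition of a multi-year subscription.

    Revenue is recognised evenly each year regardless of payment terms;
    billings are either the full contract value in year 1 (upfront) or
    one year's value each year (annual).
    """
    annual = total_value / years
    schedule = []
    billed = recognised = 0.0
    for year in range(1, years + 1):
        if upfront:
            bill = total_value if year == 1 else 0.0
        else:
            bill = annual
        billed += bill
        recognised += annual
        schedule.append(YearFigures(bill, annual, billed - recognised))
    return schedule


for label, upfront in [("3-year upfront", True), ("annual", False)]:
    print(label, contract_schedule(300.0, 3, upfront))
```

In year one, switching this contract from upfront to annual payment cuts billings from $300 to $100 while revenue stays at $100. The gap shows up as lower deferred revenue (billings = revenue + change in deferred revenue), which is the dynamic Palo Alto describes.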

Portfolio view: Excluding the billings guide (which doesn’t impact the P&L or cash), the company is still the standout cyber stock for growth and profitability and is still a stock we want to own. For now, we believe them, though this shows they’re not immune to some of the broader spending trends we’ve seen. This is something to watch for across the broader software space (where the same billing dynamic exists).

AI lifting network requirements but shorter-term enterprise spend caution?

- Cisco (owned) reported a fine set of results, but forward guidance was much lower than expected, with orders declining significantly: down 20% yr/yr.

- Cisco has been shifting its focus to software, and its software business has shown decent growth: +13% yr/yr and $24.5bn ARR.

- The issue is in its product business – a better supply environment helped deliver orders to clients in the quarter just gone, but these orders are now being digested, with Cisco estimating its clients are carrying 1-2 quarters of inventory (effectively, clients are installing equipment, and not currently ordering more).

- While Cisco is moving more toward a software business model (something its Splunk acquisition will help with), the bulk of its business is still turns/orders. This means that when customers change their ordering patterns, Cisco feels it immediately.

- In short, the weaker macro combined with the normalisation of networking spending impacts Cisco more than we expected.

- Longer term, we expect Cisco to benefit from increased demand for AI networking.

- The big debate this year has been whether Nvidia’s Infiniband (which it acquired as part of Mellanox) will become the de facto industry standard for AI workloads, or whether ethernet (which Cisco sells) will be adopted for AI. The back-end network is important because that’s where much of the performance/power equation rests: more compute requires more network bandwidth and switching intensity, and so more demand for their products. GPU clusters used to run AI chatbots need about 3x more bandwidth than a traditional compute network today. Arista and Cisco are pushing ethernet and related tech, while Nvidia is pushing Infiniband. Currently, we think Infiniband has ~90% of the AI market. Still, we expect ethernet to take an increasing share, which is part of our thesis for both Arista and Cisco (relatedly, we think the server racks for Microsoft’s Maia 100 dual-source Arista and Cisco ethernet).

- Cisco management updated their AI networking commentary, with $1bn of AI orders (up from last quarter’s $0.5bn commentary) that could be realised in FY25.

Portfolio view: We own Cisco and Arista and see AI increasing networking requirements in the back end. In the same way that we expect hyperscalers not to want to be tied entirely to Nvidia chips, they will also want an alternative to Infiniband. Therefore, we expect ethernet to be adopted for some AI workloads, which will benefit both Cisco and Arista.

Semicap resilience, China export restrictions a headache

- Applied Materials (owned) beat on top and bottom line, and guidance was better than consensus.

- The more important news, which sent shares down 6% last night, was a Reuters report (coinciding with results) that Applied is under investigation for possibly evading export controls by shipping equipment to SMIC, apparently via a subsidiary in South Korea. It received a subpoena from the Department of Commerce (DoC) last year, which it disclosed in its 10-K in October 2022; it isn’t yet clear whether there’s anything new in this.

- On results, they expect 2024 growth to be driven more by leading-edge logic (we’ve said before that we think TSMC needs to increase 5nm capacity given demand, which goes back to the H200 news at the start, and to build out its 3nm node).

- DRAM utilisation and pricing are improving. As we said, AI requires more DRAM (HBM) capacity. DRAM capex can go up a lot next year.

- There is weakness in industrial and softness in auto. They say they’re seeing lower utilisation here, which is consistent with some of the industrial semis commentary and push-outs; again, that speaks to companies like TI and Microchip keeping utilisation low.

- Their long-term growth equation remains: semis growing > GDP, semicap > semis.

- Interestingly, in the context of the Reuters report, they say they do not expect the updated export rules to have any impact: they expect Chinese demand to remain strong, given China’s domestic manufacturing capacity is structurally below its share of semis consumption. We’ve said before that it makes a lot of strategic sense for China to build out meaningful capacity in trailing-edge chips.

- Relatedly, China import data showed Q3 semicap equipment imports rose 93% yr/yr.

Portfolio view: A stellar set of results, but the Reuters article is undoubtedly unhelpful (note that this isn’t future revenue at risk, given SMIC is well out of numbers). We suspect this relates to shipments well back when SMIC was first added to the restricted list in 2020.

Semicap equipment (we own Applied Materials, Lam Research, ASML and KLA) remains a key exposure in the portfolio for us. There are multiple growth drivers that will support revenue growth out to 2030 (technology transitions, geopolitics, AI), and their business models and strong market positions in each of their process areas allow them to sustain strong returns and cash flows even in a relative downturn: Applied’s FCF doubled yr/yr in the quarter.

PC, smartphone and Android

- We commented last week on Hon Hai’s monthly sales data and the broader Android/iPhone ecosystem – with the conclusions being (very broadly) relief in the Android ecosystem and a still reasonably resilient (if unexciting) iPhone cycle.

- Taiwanese contract manufacturers Pegatron and Wistron also reported October sales ahead of usual seasonality, pointing to a relatively resilient supply chain picture.

- On the other hand, October notebook shipments were down 24% mth/mth, so the strength we saw in Q3 could have been pull-forward. If that trend continues, it would contrast with the positive Q4 growth outlook that AMD and Intel both gave.

- Lenovo reported its Q2, in which it presented a more positive view on the PC replacement cycle for next year, driven by AI: “Our innovations such as AIPC, which features AI computing and private foundation models as smart devices, are expected to be a powerful driver for the PC replacement cycle from the second half of 2024.”

Portfolio view: The smartphone and PC semis content stories have largely played out (there is still premiumisation, but nowhere near the level of 10-20 years ago). That means it’s (mostly) all about unit numbers, which is one of the reasons we don’t own ARM. As the October notebook shipments show, while it looks like inventory has cleared and the cycle is bottoming, the recovery might not be a straight line. We look for markets with a structural growth buffer from rising semis content. As we noted above, auto units were up 8% this year while Infineon grew its auto business by 26%, and next year Infineon still expects double-digit auto growth on ~1% unit growth. That’s because the driver is more about increased semis content, meaning you’re much less exposed to potentially volatile unit numbers.

Finally, Google DoJ antitrust trial – who’s being sued again? Cookies and competition

- Google’s DoJ trial had quite an astounding statement this week. From Bloomberg: “Google pays Apple 36% of the revenue it earns from search advertising made through the Safari browser, the main economics expert for the Alphabet unit said Monday. Kevin Murphy, a University of Chicago professor, disclosed the number during his testimony in Google’s defense at the Justice Department’s antitrust trial in Washington. John Schmidtlein, Google’s main litigator, visibly cringed when Murphy said the number, which was supposed to remain confidential.”

- It’s an interesting data point. We know Google paid Apple $18bn back in 2021. And it’s worth noting (and often forgotten) that this payment is, along with App Store revenue, the most significant part of Apple’s services revenue line (and is effectively all profit).

- The maths suggests that Apple’s share of Google’s total TAC (traffic acquisition costs: the payments Google makes to partners, including what it pays Apple to be the default search engine in Safari) is higher than Safari’s share of search revenue, which effectively means that Apple commands a higher rate than Samsung.

- It might speak more to Apple’s power than Google’s, and still likely represents a big incentive for Apple not to build a search engine that competes with Google. It is confusing who is being sued and why.

- On big tech and antitrust, Amazon and Meta announced a feature letting shoppers buy Amazon products directly from Facebook and Instagram ads. Broadly, internet cookies have been killed (or at least degraded) in the name of privacy. The result may be that the big tech platforms simply reinforce their own competitive advantages, with Meta getting better data and Amazon more volume (cookies might have been bad for privacy, but getting rid of them has killed competition).
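One way to gauge the scale of the Safari arrangement discussed above: if the 36% rate disclosed at trial had applied to the $18bn paid in 2021 (our assumption; the two figures may refer to different periods and terms), the implied Safari search ad revenue is simply the payment divided by the share. A minimal Python sketch, with a hypothetical helper name:

```python
def implied_revenue_base(payment_bn: float, revenue_share: float) -> float:
    """Revenue base implied by a payment that is a fixed share of that revenue."""
    if not 0 < revenue_share <= 1:
        raise ValueError("revenue_share must be in (0, 1]")
    return payment_bn / revenue_share


# Assumption: the 36% share disclosed at trial applied to the $18bn 2021 payment.
safari_search_revenue = implied_revenue_base(18.0, 0.36)
print(f"Implied Safari search ad revenue: ~${safari_search_revenue:.0f}bn")
```

If the rate and the payment did line up, that would imply roughly $50bn of Safari-derived search ad revenue in 2021; the real figure could differ if the terms changed between years.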

Portfolio view: We stay away from politics. The remedies implemented in the tech sector so far have had minimal tangible impact. We keep watching, but for now nothing materially changes the status quo.

For enquiries, please contact:

Inge Heydorn, Partner, at inge.heydorn@gpbullhound.com

Jenny Hardy, Portfolio Manager, at jenny.hardy@gpbullhound.com

Nejla-Selma Salkovic, Analyst, at nejla-selma.salkovic@gpbullhound.com

About GP Bullhound

GP Bullhound is a leading technology advisory and investment firm, providing transaction advice and capital to the world’s best entrepreneurs and founders. Founded in 1999 in London and Menlo Park, the firm today has 14 offices spanning Europe, the US and Asia.