Tech Thoughts Newsletter – 24 November 2023.

Market: Thanksgiving made this a shorter and quieter trading week, but there were two big pieces of tech news: the OpenAI saga and Nvidia’s results.

Portfolio: We made no major changes in the portfolio this week.

First up, the most extraordinary weekend. We wrote about OpenAI and Microsoft (a top 3 position in the portfolio) in our 10th November letter, following OpenAI’s Developer Day. We talked about the two-way nature of the relationship – the idea that Microsoft benefits from OpenAI via its Azure business and that OpenAI benefits from Microsoft’s ability to optimise its infrastructure and give OpenAI access to cheaper compute. In hindsight, there was a lot about that relationship and the big risk to Microsoft that we missed.

Some background on what happened:

- On Friday, OpenAI’s board fired CEO Sam Altman

- Over the weekend, there were rumours that Sam would be hired back to OpenAI

- When we woke up on Monday morning, OpenAI had hired a replacement CEO and Microsoft CEO Satya Nadella had announced that Sam would be joining Microsoft “together with colleagues”

- Finally (probably not finally) on Wednesday morning, it was announced that Sam would, in fact, be returning as CEO of OpenAI, and that there would be changes to the board (likely including a Microsoft board seat)

Firstly, in hindsight, we overlooked the risk in OpenAI’s corporate governance structure. Typically in tech, dual share class structures mean that boards are powerless and investors are beholden to the whims and fantasies of CEOs and founders who have too much power (Mark Zuckerberg and Metaverse spending, Adam Neumann and WeWork). For OpenAI, it was the opposite: a for-profit business controlled by a not-for-profit board. Its main investor, Microsoft, had no board seat, no control and no visibility, and its incentives (profit for shareholders) were misaligned with the “mission” of OpenAI’s board (from OpenAI’s filing: “OpenAI’s goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole”).

If OpenAI were run purely as a for-profit business, the analysis in our letter two weeks ago would have held. That analysis was that Azure benefits from having more workloads and OpenAI benefits from having access to cheaper compute. But it isn’t: OpenAI’s board did something unexpected because it wasn’t driven by commercial success. That made Microsoft’s investment, and the fact that it was building OpenAI into so many of its product roadmaps, a significant risk. Indeed, last week we wrote about Microsoft’s Maia chips, which were optimised for running OpenAI models. It’s a risk that we, and presumably Microsoft too, did not see.

There is one critical thing, however: building AI is very expensive, and Microsoft has access to vast amounts of compute capacity and lots of Nvidia GPUs (probably even more important than its cash). That is why Sam Altman needed to raise money (or Azure credits) from Microsoft in the first place. The fact is that OpenAI, even with a not-for-profit board in charge, still needs a lot of money and compute capacity.

It’s why Microsoft was able to put itself in a win-win situation. It had (and has) leverage over OpenAI, over and above the board, because OpenAI still needed its infrastructure and access to GPUs. So on Monday morning, it looked as though Microsoft would not only have access to OpenAI’s underlying IP (the complete source code and model weights) but would also get all of OpenAI’s talent. This quote from Nadella this week particularly stood out: “AI talent wants to go where there is significant compute”. What had seemed like a potential disaster was turning into a win for Microsoft.

As it stands, Sam won’t be joining Microsoft, and last week’s status quo, with Microsoft as a partner and investor in OpenAI, remains. We suspect there will be a lot of changes in the future and that they will benefit Microsoft (or at least remove many of the risks in its partnership with OpenAI that were unknown until this week). We expect Microsoft to have a bigger hand in OpenAI’s governance. Nadella has said that Microsoft wants “governance changes”, and OpenAI has already made changes to its board. We suspect Microsoft itself might end up with a board seat.

There is also an argument that Microsoft is better off at arm’s length from OpenAI than effectively owning it. Distance helps on regulation, and it at least preserves the perception that Microsoft remains platform- and model-agnostic.

We still don’t know why Altman was fired, and the most important question is whether the board was managing a genuine risk around AI.

That Microsoft’s leverage over OpenAI was tied up in Azure credits and GPU access is a good segue to the other big news of the week – Nvidia’s results (also a top 3 position in the portfolio).

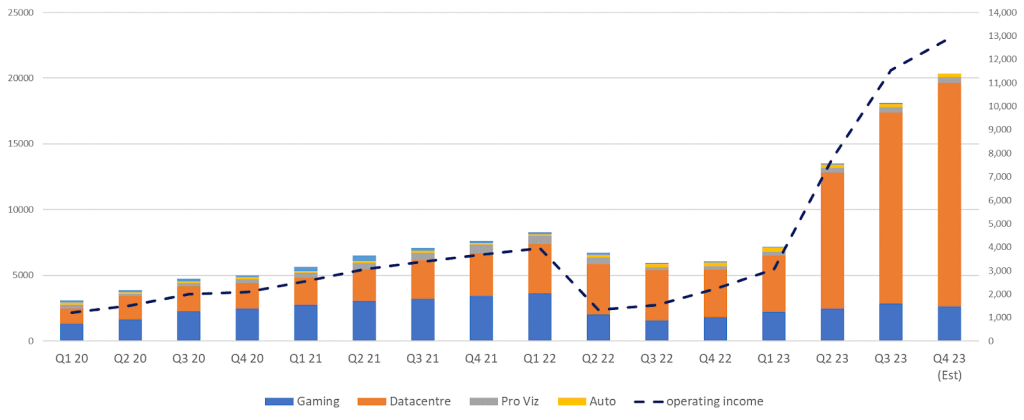

Nvidia reported another blowout quarter, beating market expectations and guiding significantly ahead of consensus. Below, we show Nvidia’s quarterly revenue and operating profit trajectory and our forecast for the next quarter. The beauty of Nvidia’s fabless business model is the operating leverage. Incremental revenue of $13bn yr/yr came with less than $250m of incremental opex, so almost all of the incremental gross profit drops straight through to operating profit. That has driven such strong EPS growth that Nvidia trades on reasonable forward valuation multiples, despite the general market perception that it is expensive.
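Here is a rough worked check on that leverage. It uses only the two figures above and treats $250m as an upper bound on incremental opex. On that basis, the share of incremental revenue absorbed by operating expenses is:

$$
\frac{\Delta\,\text{opex}}{\Delta\,\text{revenue}} < \frac{\$0.25\text{bn}}{\$13\text{bn}} \approx 1.9\%
$$

and therefore:

$$
\Delta\,\text{operating profit} = \Delta\,\text{gross profit} - \Delta\,\text{opex} > \Delta\,\text{gross profit} - \$0.25\text{bn}
$$

This is a back-of-the-envelope sketch, not a forecast. Gross margin isn’t given here, so it stops short of an incremental operating profit figure. It does show that opex absorbs less than 2% of the revenue growth.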

Nvidia is currently supply-constrained. Through the Q3 earnings season, we continued to hear from many companies that they were waiting on supply of Nvidia GPUs, which are sold out. The cloud hyperscalers have all announced huge AI-driven capex plans, which they continue to guide upwards into 2024, and enterprise software businesses have all added AI capabilities to their offerings. Every CEO in every industry is trying in some way to build or gain access to Nvidia GPU capacity.

Our above-consensus view (we think Nvidia can beat next quarter’s guidance) continues to centre on supply upside from node shifts and freed-up capacity at TSMC. This, along with better pricing, is still playing out. We see further upside as TSMC continues to accelerate the build-out of its advanced packaging (CoWoS) capacity. TSMC’s October revenue (+35% mth/mth and +16% yr/yr) was an excellent leading indicator of Nvidia’s GPU demand filling its 7nm and 5nm nodes. The next data point we’re watching is TSMC’s November revenue release.

Limited wafer capacity at TSMC gives Nvidia a significant short-term competitive advantage, because Nvidia has secured much of that capacity for itself. This quarter it disclosed $17bn of purchase commitments. We think these relate mainly to CoWoS and HBM, now the main bottlenecks in the AI supply chain.

The earnings call didn’t contain much that we didn’t already know (which is no bad thing), and the key question for the market is whether this level of demand is sustainable. There are many ways to frame the AI opportunity. Jensen Huang has spoken before about the idea of $1tn of infrastructure spend ($250bn a year) that needs to shift to accelerated compute: GPUs, faster CPUs, and lower-latency, higher-throughput networking equipment. Compare that with Nvidia’s current revenue: its data centre business will generate ~$17bn in revenue next quarter. Against that backdrop, it’s clear that we’re still in the very early innings of this shift.

This earnings call was also the first time he spoke more specifically about changing user behaviour. We might have seen lots of large language models released, but do we know how to use them, and how to use them to interact with existing software? His comments were perhaps informed by the release of Copilots and Agents in the software space over the past several months, including by OpenAI at its DevDay. Jensen Huang said:

Generative AI is the largest TAM expansion of software and hardware we’ve seen in several decades. At the core of it, what’s exciting is that, what was largely a retrieval-based computing approach, almost everything that you do is retrieved off of storage somewhere, has been augmented now, added with a generative method. And it’s changed almost everything. You could see that text-to-text, text-to-image, text-to-video, text-to-3D, text-to-protein, text-to-chemicals, these were things that were processed and typed in by humans in the past. And these are now generative approaches. And so we’re at the beginning of this inflexion, this computing transition.

The only wrinkle continues to be China: management said that sales to China will drop significantly next quarter. We still expect the release of the new H20 chip, which is designed to comply with the export restrictions, but no explicit timeline was given.

We’ve commented before on the risk of a large, unsustainable pull-forward of demand from China. Nvidia knew more restrictions were coming, and we suspect it put a lot of Chinese demand at the top of the queue, front-loading those orders to get them through. Two things make us relatively comfortable. First, we know from Dell and Super Micro that there is still a very significant backlog of Western demand. Second, the H20 chip will likely sustain the prior pattern of behaviour, with Chinese customers ordering heavily in case it is restricted too. We need to keep watching the commentary. For now, we still think Western demand (for H100 and now H200) takes us to Q3/Q4 next year, which then takes us to the B100 refresh cycle.

What do Nvidia’s results mean for the rest of tech?

Fundamentally, this set of results gives us another datapoint that the AI infrastructure build is an area of the semis market you want to be exposed to, with a long runway of growth and supply constraints which will likely continue to support healthy pricing in the mid-term.

Nvidia’s position as the GPU leader is clear, but the AI build-out will also benefit players across the AI value chain. We’ve commented before that Nvidia’s current supply constraints help AMD’s entry into the GPU space with its MI300X chip (we own AMD). No customer will want to be tied to one powerful provider, and it’s no secret that Nvidia chips are costly. Several groups will ultimately benefit:

- TSMC, which makes both Nvidia’s and AMD’s chips on its 5nm node
- the networking infrastructure providers around those chips (we own Cisco and Arista Networks)
- the semicap equipment makers, since these chips will all be made on the most advanced nodes

For enquiries, please contact:

Inge Heydorn, Partner, at inge.heydorn@gpbullhound.com

Jenny Hardy, Portfolio Manager, at jenny.hardy@gpbullhound.com

Nejla-Selma Salkovic, Analyst, at nejla-selma.salkovic@gpbullhound.com

About GP Bullhound

GP Bullhound is a leading technology advisory and investment firm, providing transaction advice and capital to the world’s best entrepreneurs and founders. Founded in 1999 in London and Menlo Park, the firm today has 14 offices spanning Europe, the US and Asia.