Tech Thoughts Newsletter – 28 December 2023.

It goes without saying that the story of 2023 has been AI. Just about every analyst day turned into an “AI day”, with big bonus points if you could get Nvidia CEO Jensen Huang to join you on stage.

2023 has seen a huge amount of investment in AI infrastructure (and there would have been even more, were it not for chip supply shortages). With that, access to AI compute and large language models, predominantly via the hyperscalers’ cloud services, continues to get cheaper. To the extent that the investment in training large language models leaves behind an installed base of depreciated GPUs that can be reused for low-cost inference, that is good news overall: it opens up more innovation and experimentation, and ultimately means we’ll reach new viable revenue and business models much more quickly than in prior innovation cycles.

There’s lots we don’t know yet about AI. Which business and revenue models will work? Which won’t? We’re sure a new “FAANG” acronym will emerge as new AI-first businesses are founded (it’s worth remembering that Uber was founded in 2009, two years after the iPhone launched). Will there be a new hardware form factor for AI (will it be Apple’s Vision Pro?), and is there any standalone value in LLMs? 2023 saw a proliferation of large language models and strategies, from Microsoft going all-in on OpenAI (tactician of 2023 has to go to Satya Nadella for navigating November’s OpenAI boardroom upheaval), to Meta open-sourcing Llama, to Amazon’s LLM-agnostic pitch.

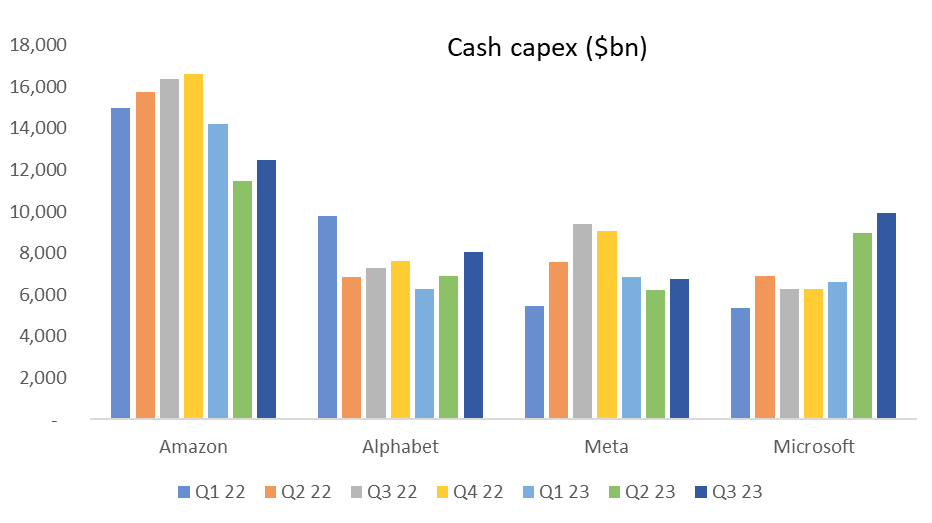

But there’s also lots we do know about AI: the investment companies are making and what that’s ultimately being spent on, and that’s where we try to focus the portfolio. Big tech and the cloud hyperscalers have all announced huge AI-driven capex plans, and enterprise software businesses have all added AI capabilities to their offerings. We know every CEO in every industry is trying in some way to either build or gain access to Nvidia GPU capacity. We think hyperscaler capex will amount to ~$160bn in 2023, an astounding level of spend, and the hyperscalers have all explicitly guided to capex going higher in 2024. Google, Amazon, Meta and Microsoft are all in a position to keep investing: all have large cash balances and the ability to sustain high levels of investment over time. What’s more, for each of them, renting out GPU capacity is a >20% ROIC business, so high capex spend is monetised almost immediately. That is one of the reasons we expect it to be sustained (much more so than the router/server/fibre capex of the early 2000s).

In the portfolio we’ve benefited significantly this year from capex spend on servers and networking infrastructure through increased semiconductor content. The servers used in training and inference contain far more semiconductor content than standard enterprise servers and cost multiples as much: more and faster CPUs and GPUs, and more advanced networking. Further down the chain, this also benefits the semicap equipment companies whose tools are needed to make these leading edge chips.

We think these drivers will continue in 2024, but the AI beneficiaries will naturally extend further. For enterprise software, we think the key will be the extent to which companies can get a price-per-seat uplift for AI features and services, and so drive higher, sustained growth rates. We’ve recently initiated positions in the cloud consumption plays, which we believe will be next to benefit from increased CIO budget allocation towards companies’ AI data strategies.

In terms of portfolio performance, we’re now close to the end of the year, and the portfolio is up 45% YTD. Salesforce, Nvidia and AMD were the top contributors, though each contributed only ~4 percentage points of that 45%, so performance was genuinely broad-based across our portfolio names.
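The “broad-based” point can be checked with simple arithmetic. A minimal sketch, assuming the ~4% for each top contributor means ~4 percentage points of the 45% YTD return (the figures simply restate the text):

```python
# Contribution maths from the paragraph above. Assumption: "4%" means ~4
# percentage points of the 45% YTD return for each of the top three names.
TOTAL_RETURN_PTS = 45.0
TOP_CONTRIBUTORS_PTS = {"Salesforce": 4.0, "Nvidia": 4.0, "AMD": 4.0}


def contribution_split(total_pts: float, top: dict) -> tuple:
    """Return (points from top names, points from everything else, top names' share)."""
    top_pts = sum(top.values())
    rest_pts = total_pts - top_pts
    return top_pts, rest_pts, top_pts / total_pts


top_pts, rest_pts, top_share = contribution_split(TOTAL_RETURN_PTS, TOP_CONTRIBUTORS_PTS)
print(f"top 3: {top_pts:.0f} pts, rest: {rest_pts:.0f} pts, top-3 share: {top_share:.0%}")
```

On those assumptions, the three largest contributors account for 12 of the 45 points, roughly a quarter of the return, leaving ~33 points from the rest of the portfolio.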

Onto our investment themes and things to watch in 2024:

AI and Big Tech – sustaining innovation…

- Capex plans aside, AI and how it will impact big tech has been the key debate for 2023 – and that will surely continue into 2024. Three things we think will matter.

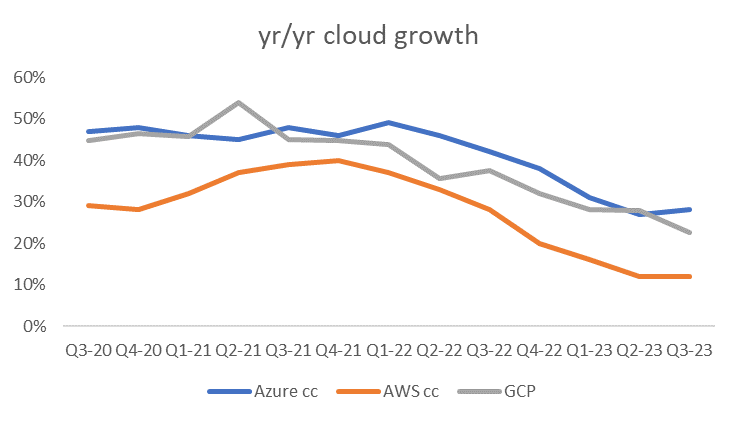

- The first is the cloud opportunity for each of Microsoft, Amazon and Google. Each effectively resells compute capacity, and to the extent that AI compute is going up – as businesses look to train models and run inference – they should all benefit.

- While it’s hard to disentangle the easing of 2022/early-2023 “spend optimisation” headwinds from new AI workloads driving revenue upside, we think all three are seeing a tangible revenue benefit from AI: Microsoft last quarter pointed to 3 points of Azure growth coming from its AI services.

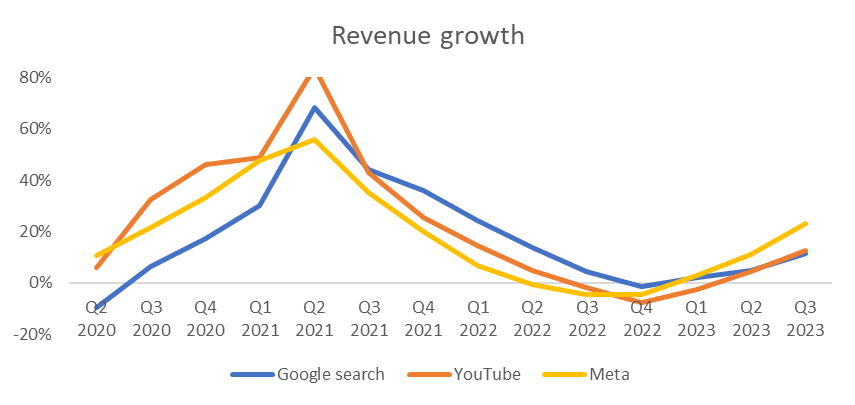

- The second is where big tech can apply AI to their existing businesses. For Google and Meta, it’s increasingly difficult to disentangle AI from their core business performance. To the extent that they can apply AI to their very large advertising businesses, they were arguably the biggest short-term beneficiaries of AI. Both have integrated AI into their advertiser tools to increase ad performance, which helped their ads businesses return to growth in 2023:

- Spend that went away as ad performance fell in a post-ATT world (Apple’s App Tracking Transparency, which requires apps to ask users’ permission before tracking them across other companies’ apps and websites) has come back, and then some, with AI tools around targeting and optimisation seemingly mitigating the ATT impact for advertisers.

- And the third, but perhaps the most important – and which will surely drive performance in 2024 – is who can turn AI into an incremental or new revenue opportunity.

- For us, Microsoft has the standout incremental revenue opportunity from AI. We’ve talked before about the maths of Microsoft 365 Copilot: $30 per user per month applied to a potential user base of ~400m, which annualises to a $144bn potential business. That’s like adding more than a Meta, and close to Google’s search business, to your revenue base.

- The biggest debate around big tech AI disruption this year has clearly been centred around AI and search, and the impact on Google’s revenue model. Do blue links and AI go hand in hand? Does the search interface get replaced by a chatbot?

- The possibility that AI needs a new form factor brings Apple into focus with its Vision Pro headset, its first new product category since the Apple Watch.

- More broadly, there have been lots of questions around Apple as the “less obvious” AI play in big tech. But if you think about more inference happening locally on the end device (ChatGPT released its app on the iPhone first), you can argue for Apple as a big beneficiary (effectively the biggest and best reseller of compute).

- And shifting AI workloads to the edge where you can (either at the network edge or end device) makes sense too – both from a cost and a latency perspective, and indeed could be a driver of a new smartphone/PC upgrade cycle.

- Hardware/software integration remains a key source of advantage in tech, and continues to factor into our thinking on AI. With each of the hyperscalers having their own proprietary chip solutions, will LLMs be optimised around the silicon they’re run on? Each of Google, Meta, Microsoft and Amazon has hardware businesses, so does the ultimate success of their LLMs depend more on whether they become the foundation that other apps are built on, running on their custom hardware?
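The Copilot sizing in the list above is just price × 12 months × potential seats. A minimal sketch; the `attach_rate` parameter is a hypothetical addition (not from the text) to show how the $144bn headline, which assumes every potential seat pays, scales with adoption:

```python
# Sizing maths for Microsoft 365 Copilot, using the text's inputs:
# $30 per user per month and ~400m potential seats.
# attach_rate is a HYPOTHETICAL parameter added for illustration; the $144bn
# headline figure corresponds to attach_rate = 1.0 (every potential seat pays).

def annual_revenue_bn(price_per_month: float, seats: float, attach_rate: float = 1.0) -> float:
    """Annualised revenue in $bn for a per-seat monthly subscription."""
    if not 0.0 <= attach_rate <= 1.0:
        raise ValueError("attach_rate must be between 0 and 1")
    return price_per_month * 12 * seats * attach_rate / 1e9


headline = annual_revenue_bn(30, 400e6)
print(f"100% attach: ${headline:.0f}bn")
for rate in (0.10, 0.25, 0.50):
    print(f"{rate:.0%} attach: ${annual_revenue_bn(30, 400e6, rate):.0f}bn")
```

The attach-rate loop only shows how sensitive the headline is to adoption; the text itself doesn't forecast an attach rate.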

Portfolio view: Microsoft is our largest portfolio exposure within the big tech names, given we view it as the most likely to see tangible and immediate revenue upside with Copilot. We also own Google and Amazon – we think there are reasons to believe that Google’s embedded advantages around search will allow it to defend its revenue model, and Amazon owns the biggest cloud business in absolute dollar terms – which will benefit from continued growth in AI workloads.

We don’t own Meta, given the structural challenges around the top line and competition in digital advertising, although we do recognise an impressive return to growth and Zuckerberg’s momentous pivot to focus on margins this year (vs. the free-for-all spending on the Metaverse last year).

Lastly, we initiated positions in Snowflake and Datadog, which we expect to be further downstream beneficiaries of cloud consumption and AI growth.

All about chips… supply constraints to continue

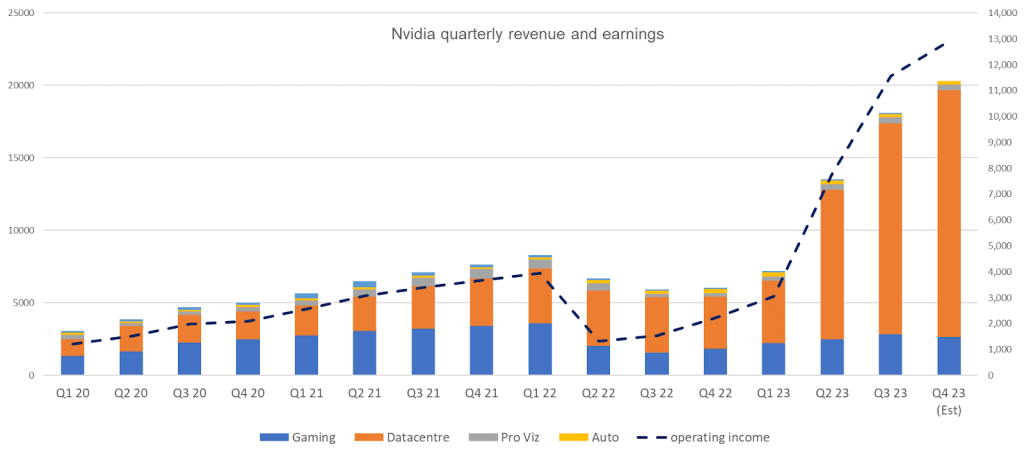

- If 2023 was all about AI, it was really all about Nvidia – by far the biggest direct revenue beneficiary of AI this year:

- We think Nvidia will remain the AI industry standard in 2024, but with supply still constrained (everyone wants more Nvidia chips than TSMC can make) we think AMD’s MI300X GPU competitor will gain impressively from a standing start (and come in materially ahead of its $2bn guidance). In November, Dell gave the most recent commentary, pointing to 39-week lead times for Nvidia GPUs: while supply is increasing, so is demand.

- Nvidia will launch two new chips in 2024 – with its H200 chip (the “successor” to its current H100 “sold out” AI chip) coming in early 2024 and its subsequent B100 following later in the year.

- All of these chips are made at TSMC’s 5nm node, which itself remains a big supply constraint. Beyond that, the inclusion of HBM3 (high bandwidth memory), needed to store and retrieve large amounts of data, requires advanced packaging (CoWoS), which remains a further constraint for the industry.

- Nvidia has $11.15bn of purchase commitments (and AMD $5bn), which we think relate mostly to CoWoS and HBM, now the main bottlenecks in the AI supply chain.

- That in itself speaks to a significant barrier to entry that both Nvidia and AMD have vs. the competition: they have effectively secured the bulk of TSMC’s advanced packaging capacity. We own Nvidia, AMD and TSMC (which has, in turn, effectively had its CoWoS capacity expansion underwritten).

- Each of the hyperscalers will continue to design and build its own chips: they have enough utilisation, specific use cases and specific workloads to justify ASICs (application-specific integrated circuits). And there’s no doubt that no player wants to be entirely tied to one very powerful supplier in Nvidia. It’s important to note, though, that they’re all building ASICs and not GPUs. ASICs can deliver much higher performance on the workloads they are designed for, but are very specific to the application, with a much more limited scope in terms of the workloads that can run on them.

- Our view is that while ASICs will rebalance some of the workloads, AI GPUs will remain the significant majority of the market. A big part of why is that we’re still so early in AI use cases, so the flexibility that comes with a GPU vs a custom chip is much more important. That’s not to say there won’t be a point in the future when you see more ASIC deployments; that will likely come with more stabilisation in the applications and algorithms run on chips (telecom base stations run mostly on ASIC equivalents, which speaks to the maturity of that market). But in the short to mid term we expect GPUs, where Nvidia and AMD are really the only credible offerings, to represent the bulk (90%+) of AI infrastructure, and ASICs not to cannibalise GPU workloads.

- Thinking more broadly about AI infrastructure and beneficiaries, networks supporting large language models need to be lossless, low latency and able to scale – we think that means fuller, more frequent network infrastructure upgrades which the whole ecosystem is benefitting from.

- The back-end networking infrastructure is important because that’s still where much of the performance/power equation rests. More compute requires more network bandwidth and switching intensity: GPU clusters used to run AI workloads need about 3x more bandwidth than a traditional compute network today.

- Currently we think Nvidia’s InfiniBand has ~90% of the AI networking market, but we expect Ethernet to take increasing share, which is part of our thesis for both Arista and Cisco. As with GPUs, customers are unlikely to want to tie themselves into InfiniBand (effectively Nvidia’s proprietary solution).

- And we think demand will be driven not only by the initial infrastructure build, but also by a continued refresh cycle as chips and networking become more efficient (and therefore cheaper to run) in the years ahead.

Portfolio view: We own Nvidia and AMD, and expect them to continue to be the biggest beneficiaries of downstream capex spend in 2024. Both make their chips at TSMC, which we own, and further downstream we expect to benefit from sustained capex spend through our semicap equipment exposure (we own ASML, LAM Research, KLA and Applied Materials). On the networking side, we own Broadcom, Arista and Cisco. And on the DSP side (digital signal processors, used in the optical interconnects that link GPUs), we own Marvell (which has additional opportunities in some of the custom silicon ASICs built by the hyperscalers).

EV – China price war and subsidies, good for chips, bad for auto OEMs

- Within semis, we continue to focus on structurally increasing content stories. As we noted above, that is playing out in AI (the move from traditional to accelerated servers results in a step-change increase in GPU, CPU and networking content), and it’s also playing out in electric vehicles.

- Moving from combustion engine to electric results in as much as 3x the dollar semiconductor content per vehicle, with the biggest content gains coming from the drivetrain and power semiconductors.

- We think one of the issues in the market’s analysis of autos this year has been too much focus on the global OEMs (US/European/Japanese), which, Tesla aside, are all reporting higher EV inventories and struggling sales.

- What the market doesn’t look at closely enough is China. China EV was really the one bright spot in otherwise very damp Chinese economic data in 2023. While admittedly helped by continued subsidies, China’s EV penetration is set to reach close to 40% by year end, and China is by far leading the world in the move away from combustion engine vehicles.

- This year we’ve seen consistent beats in Chinese EV export numbers, and a significant number of higher-end Chinese model launches likely to continue to challenge the global auto OEMs (BMW, Porsche). That means the share shift away from international OEMs to Chinese EV makers, not just the domestic shift in China from ICE to EV, is likely to accelerate, as is the destruction of the combustion engine market as a whole.

- What’s more, auto OEMs are competing on content and features, much as in the old smartphone world. It’s a multi-year driver for the semiconductor companies that supply them (and unlike in smartphones, these suppliers are designed in for 7-year cycles).

- It all means Infineon is expecting double-digit growth in its auto business next year while, critically, assuming flat (~1%) car production. For Infineon, 8% unit growth in autos in 2023 translated to 26% top-line growth.

- We note below the difficulty with investing in semis markets that don’t have a structural content buffer, which makes you much more sensitive to volatile unit numbers – it’s why we’re much more comfortable with auto semis exposure.

- In 2023, the European Union launched an investigation into Chinese subsidies for electric vehicles, which it argues (probably correctly!) are distorting pricing in the market. The comparison made was with the European solar industry, where state-backed Chinese competition killed off the local industry.

- We think the barriers to entry and pricing power in an EV world are even lower than in the traditional combustion engine market, which has historically been a difficult industry in which to make a sustainably high return. We expect to continue to see price wars, regardless of the conclusions of the European investigation. The reality is that European EV production targets are also a recipe for a race to the bottom on pricing.
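The Infineon figures above imply a sizeable per-vehicle uplift. A minimal sketch, assuming revenue is simply units × revenue per unit (so semiconductor content, pricing and mix are lumped together), with 10% as a hypothetical stand-in for “double digit”:

```python
# Decomposing Infineon's 2023 auto growth using the figures in the text:
# 8% unit growth translated into 26% top-line growth.
# Assumption: revenue = units x revenue per unit, where "revenue per unit" bundles
# semiconductor content, pricing and mix together.

def implied_per_unit_growth(revenue_growth: float, unit_growth: float) -> float:
    """Growth in revenue per unit implied by total revenue growth and unit growth."""
    return (1 + revenue_growth) / (1 + unit_growth) - 1


uplift_2023 = implied_per_unit_growth(0.26, 0.08)
print(f"implied per-vehicle uplift, 2023: {uplift_2023:.1%}")

# Hypothetical reading of the 2024 guide: what per-unit growth delivers 10%
# ("double digit") revenue growth if production grows only ~1%?
needed_2024 = implied_per_unit_growth(0.10, 0.01)
print(f"per-vehicle growth needed for 10% with ~1% units: {needed_2024:.1%}")
```

On that simple decomposition, 26% growth on 8% more units implies roughly 17% growth in revenue per vehicle, and double-digit growth on ~1% units needs roughly 9%: that gap is the structural content buffer the text describes.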

Portfolio view: we own Infineon and NXP. We continue to think auto – along with AI – is a bright spot in semis end demand, with the structural increase in power semis in the move to EV. We continue to think the competitive environment for the global auto manufacturers is tough – Tesla price reductions speak to that and we don’t own any auto OEMs (manufacturers). But there are only a handful of auto semiconductor suppliers, which are designed in over long cycles, and which can maintain pricing power – it makes it an attractive place to be in the value chain.

And in smartphone and PC, inventories normalised, but beware end demand…

- Elsewhere in semis, we ended last year in the midst of broad end-market weakness, high inventory levels and destocking.

- Days of inventory had reached all-time highs (higher than in 2000 and 2008), which led many companies to reduce utilisation levels to historic lows. Finally, in Q3 this year, we started to see both a stabilisation of end demand in PC and smartphone and a return to more normal inventory levels. The only areas of real weakness in the market now are industrial (especially China) and an ongoing server/enterprise inventory correction (which we should be through in Q4/Q1). But with the biggest market (smartphone) turning, we think we’ll see good broad market growth in 2024.
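Days of inventory, the metric referred to above, is commonly defined as inventory divided by cost of goods sold, scaled to the number of days in the period. A minimal sketch with hypothetical figures, showing why the metric spikes when demand falls even if inventory isn't growing:

```python
# Days of inventory (DIO) is commonly computed as inventory / cost of goods sold
# x days in the period. All figures below are HYPOTHETICAL, chosen only to show
# how falling demand pushes the metric up even when inventory isn't growing.

def days_of_inventory(inventory: float, cogs: float, days_in_period: int = 90) -> float:
    """Days of inventory outstanding for a period (default: a ~90-day quarter)."""
    if cogs <= 0:
        raise ValueError("cost of goods sold must be positive")
    return inventory / cogs * days_in_period


# Same $1.5bn of inventory, but quarterly COGS falls from $1.5bn to $1.0bn.
before = days_of_inventory(1.5e9, 1.5e9)
after = days_of_inventory(1.5e9, 1.0e9)
print(f"days of inventory: {before:.0f} -> {after:.0f}")
```

With COGS falling alongside sales, the quickest lever for bringing the ratio back down is to build less inventory, which is what cutting utilisation does.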

Portfolio view: Despite the market bottoming, we still don’t like specific exposure to the PC and Android markets (unlike auto and AI, the content story in both is over, and both are fairly fully penetrated unit-wise). The Android market is still especially tough to call for US component suppliers given they aren’t supplying into Huawei (which is on the restricted list). Huawei will also soon launch the low-end Nova 11 SE, which will likely be equipped with another “homegrown” chip (though downscaled from the Mate 60 Pro’s, it will still likely be 7nm). One potential upside driver we do consider, though, is AI compute at the edge driving an upgrade cycle in smartphone and PC.

One of the big question marks around end demand in 2024 remains China. Outside of autos, the expected recovery in demand after the COVID reopening was much slower than anyone hoped for or predicted, and the economic data continues to be mixed. It looks like companies have been reluctant to hire and consumers in turn have been reluctant to spend. For consumer tech it could remain a significant headwind to demand.

Semicap resilience – AI demand, tech leadership and geopolitics

- Semicap equipment spending remained resilient in 2023, down slightly from a high-spend year in 2022, with weakness in leading edge logic and memory spend offset by high demand at the trailing edge, particularly from China.

- For 2024, we expect the market to grow again (single digit, second half driven), with leading edge logic helped by the build out of AI capacity; China continuing to spend at the trailing edge; and – perhaps the most important swing factor – memory spend returning after the worst downturn in a decade (again helped by AI-driven HBM demand).

- We’ve spoken before about the semiconductor content increases moving from a traditional enterprise server to an AI server – the most significant increase is in GPU (which typically isn’t present at all in a traditional server) but there is also a significant memory upgrade – 6-8x more DRAM content.

- HBM (High Bandwidth Memory, which is required in AI given higher processing speeds) dies are around twice the size of standard DRAM dies of equivalent capacity. That’s important because larger dies naturally limit industry supply growth and ultimately require more semicap equipment.

- 2023 also saw Intel cement its ambitions to return to leading edge manufacturing with its foundry model, with its Arizona fab set to ramp 18A (broadly equivalent to TSMC 3nm) in 2024. Importantly, that means building out its EUV (ASML tool) capacity, and we think Intel will be a significant driver of orders for ASML over the next 2-3 years as it’s forced to invest to catch up after the last 10 years of underinvestment.

- This matters not only because Intel is the biggest capex spender, and so in most cases the biggest customer of our semicap equipment companies, but also because we think it will drive investment and spend across the industry. Each of Intel, TSMC and Samsung needs to continue to invest or risk falling behind.

- While US export controls are preventing China from receiving leading edge machines, it can still receive equipment to build out trailing or more mature node technology. Our view is that it makes a lot of sense for China to try to build up a very strategic position in trailing edge semis. The reality is that there is a structural supply shortage in trailing edge globally; these trailing edge semis are critical (in autos, industrial), as was clearly borne out by the shortage during the pandemic; and it is only really TSMC that has meaningful supply outside of China (GlobalFoundries is still relatively small in terms of capacity). It’s also an area the US has bizarrely ignored, and continues to ignore (even with the CHIPS Act), after Intel sold off or repurposed most of its trailing edge fabs decades ago.

- Building up critical supply, not only for its own domestic demand but also the supply the US needs for products US consumers buy, would also put China in a reasonably powerful negotiating position in the continued China/US trade “war” (see below).

- It’s important because it means that we think the current high level of semicap equipment revenues coming from China is sustainable, and it’s part of our above consensus view on the space.

- The other big factor in semicap equipment for 2024 is likely to be the continued battle for tech sovereignty. Governments around the world have decided building and subsidising local fabs is a good idea. We’ve said before that ex the geopolitical risk in Taiwan/China, it makes very little real sense! But semis and geopolitics continue to be intertwined.

- In the US, TSMC, Samsung and Intel all have ambitious plans for leading edge fab build-outs. In Germany, TSMC, Bosch, Infineon and NXP announced in a joint statement that they would build a €10bn fab in Dresden, and Intel announced a huge €30bn investment.

- It shows that tech sovereignty is not just a US phenomenon. The European Chips Act is a ~€43bn total package. We also saw subsidies from Israel (Intel) and Japan (Micron) announced this year. More localised fabs ultimately mean more semicap equipment, and that remains one of the drivers of our above consensus view on the space.

- The country we’ll be watching most carefully in 2024 is India. Micron was the first this year to announce a large-scale investment there, with 70% of the cost supported by India’s central and state governments. India remains a potentially huge semiconductor production market outside of China/Taiwan.
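Why larger HBM dies (the die-size point above) translate into more wafers, and so more equipment, can be seen with a common first-order dies-per-wafer approximation. A rough sketch, assuming a 300mm wafer and a hypothetical 100 mm² standard DRAM die (only the ~2x size ratio comes from the text); it ignores scribe lines, edge exclusion and yield:

```python
import math

# Common first-order approximation for gross dies per wafer:
#   DPW ~= pi * (d/2)^2 / A - pi * d / sqrt(2 * A)
# where d is the wafer diameter (mm) and A the die area (mm^2). It ignores
# scribe lines, edge exclusion and yield, so treat it as a rough sketch only.

def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    radius = wafer_diameter_mm / 2
    area_term = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(area_term - edge_loss)  # truncate: partial dies are unusable


# Hypothetical die sizes: a standard DRAM die vs an HBM die twice as large
# for the same bit capacity (the ~2x ratio is from the text; 100 mm^2 is assumed).
standard = gross_dies_per_wafer(100.0)
hbm = gross_dies_per_wafer(200.0)
print(f"standard: {standard} dies/wafer, HBM: {hbm} dies/wafer, "
      f"wafers per unit of bits: {standard / hbm:.2f}x")
```

Because edge losses grow as dies get bigger, doubling die area slightly more than halves the gross dies per wafer in this model, so the same bit output as HBM needs a little over twice the wafer starts.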

Portfolio view: Semicap equipment (we own Applied Materials, LAM Research, ASML and KLA) remains a key exposure in the portfolio for us. As we’ve noted above, there are multiple growth drivers which will support revenue growth out to 2030 – technology transitions, geopolitics, AI; and their business models and strong market positions in each of their process areas allow them to sustain strong returns and cash flows this year even in a relative downturn.

China and the US… a complicated relationship likely continues

- China and the US remained in focus, with the export rules from October 7th 2022 updated again a year later in October 2023 – focused around AI.

- The updated restrictions followed on from the release of SMIC/Huawei’s 7nm smartphone chip which brought into question whether existing entity list restrictions had been circumvented by China.

- The rules introduced in October drop the 2022 interconnect criterion and instead add a test on performance density, measured in flops per square millimetre of die area. That specifically closes off workarounds, effectively stopping chip companies from getting the same performance by spreading it across more, smaller chips.

- That meant that Nvidia’s A800 and H800, the “slowed down” chips specifically developed to clear the 2022 export rules, were no longer allowed to be shipped into China.

- Nvidia responded by releasing three new China chips, the H20, L20 and L2, which are essentially designed to fit inside the performance density and peak performance limits in the new rules. It’s no real surprise that Nvidia wants to keep product going into China. Remember that Nvidia’s CUDA ecosystem is highly relevant in China and remains a significant moat as long as Nvidia can supply chips (since CUDA only runs on Nvidia chips); having a compliant product ensures CUDA can remain the dominant framework.

- Huawei’s Ascend GPU (built on SMIC’s 7nm) is China’s best domestic competitor, and Huawei’s CANN architecture competes with CUDA. But it’s still not clear how much supply SMIC will be able to produce at 7nm, or whether it could ever get down to 5nm without EUV tools.

- In addition to the specific chip restrictions, further measures were put in place to prevent Chinese companies getting access to chips via overseas subsidiaries or suppliers based in countries outside of China where there aren’t any export restrictions.

- The restrictions on semicap equipment were also strengthened, but still relate to the very leading edge nodes. Remember that the critical EUV technology (ASML machines) has been banned from China since the Trump administration. The best read we have on the semicap changes comes from ASML’s and LAM’s results commentary, both of which suggested the update was very incremental. ASML noted that it will impact “a handful of Chinese fabs” and estimated that 10-15% of its current China shipments would fall under the new rules, while LAM said it didn’t see “any material impact” from the new regulations.
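The logic of the performance density test described above can be illustrated with toy numbers. This is a sketch only: the performance scores and both limits below are hypothetical placeholders, not the figures in the US rules. It shows why a per-chip performance cap alone can be sidestepped by splitting the same capability across several smaller chips, whereas a density limit still catches each smaller chip:

```python
from dataclasses import dataclass

# Illustrative model of a density-based export test. ASSUMPTIONS: "performance" is
# an abstract processing-performance score, and both limits are HYPOTHETICAL
# placeholders, not the thresholds in the actual US rules.
HYPOTHETICAL_PER_CHIP_CAP = 2000.0   # cap on a single chip's performance
HYPOTHETICAL_DENSITY_LIMIT = 5.0     # cap on performance per mm^2 of die area


@dataclass
class Chip:
    name: str
    performance: float
    die_area_mm2: float

    @property
    def density(self) -> float:
        """Performance per square millimetre of die area."""
        return self.performance / self.die_area_mm2


def restricted(chip: Chip) -> dict:
    """Which of the two hypothetical tests a chip would trip."""
    return {
        "per_chip_cap": chip.performance >= HYPOTHETICAL_PER_CHIP_CAP,
        "density": chip.density >= HYPOTHETICAL_DENSITY_LIMIT,
    }


# One large chip vs the same capability split across four smaller chips.
big = Chip("single large chip", performance=4000.0, die_area_mm2=800.0)
small = Chip("one of four smaller chips", performance=1000.0, die_area_mm2=200.0)
for chip in (big, small):
    print(chip.name, restricted(chip))
```

Each smaller chip has a quarter of the performance on a quarter of the area, so its density is unchanged and the density test still applies.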

Portfolio view: The US export restrictions are guided to be reviewed on an ongoing basis – so there’s no doubt we’ll see further noise and restrictions through 2024.

Nvidia’s new “China” chips will start to ship in 2024. The H20 has a comparable die size to the H100 and still consumes CoWoS supply, so supply is likely to remain as constrained as ever.

On the semicap side, the biggest restriction continues to be around EUV, which has been in place since the Trump administration, so there is little new news. And the 2022 semicap restrictions have been much less harsh than the companies themselves had originally assumed. As we noted above, China can still receive equipment to build out trailing or more mature node technology, and our view is that it makes a lot of sense for China to build up a very strategic position in trailing edge semis.

Enterprise software – budget scrutiny to continue? AI upside to ARPU?

- Enterprise software demand has held up well in 2023 and, most impressively, companies have shown an ability to cut costs without hurting the top line. Salesforce has surely been the standout example: its execution and ability to increase operating margin without hurting growth has been amongst the most impressive stories in software this year.

- Budgets broadly got tighter, which led to spend consolidating. 2024 budgets remain uncertain, likely depending more broadly on the macro and the funding environment (see also our comments below on billings and contract durations).

- The key question for us in software next year will be when and if AI starts to be a meaningful revenue generator for software companies, rather than the current cost of doing business.

- Several of our holdings have announced explicit monetisation plans for AI, and AI as an enterprise opportunity makes sense. It favours established enterprise software businesses that (1) can leverage very large installed bases to upsell AI features; (2) have large cash balances to invest in AI; and (3) have the right business model (i.e. subscription) to match the increased cost.

- And for enterprise software customers it makes much more sense to get AI capabilities via their existing platform software providers than to try to implement it themselves.

- How LLMs will play out in enterprise software is still uncertain: will there be smaller models built around specific software verticals, or will companies gain a competitive advantage by effectively letting a pretrained model access their own proprietary data (whether enterprise data or customer data)?

- More broadly in software, we’re doing some digging around contract durations and their impact on billings/cRPO. A few datapoints towards the end of the year suggest to us that we’ve been through a period of companies securing sales with multi-year discounts, which customers were happy to accept when money was free. That made billings look good (but hurts revenues down the line), and it is now rolling off. Ultimately, rolling back to shorter-duration contracts doesn’t impact the P&L and earnings, but it’s important to scrutinise why any metric might be deteriorating and what it might say about underlying demand.
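The billings point above comes down to timing. A minimal sketch, assuming a hypothetical $300k three-year contract recognised ratably, the common approximation billings = revenue + change in deferred revenue, and no multi-year discount:

```python
# Minimal sketch of why upfront multi-year billing flatters billings but not revenue.
# Assumptions: a HYPOTHETICAL $300k, 3-year contract recognised ratably, no discount,
# and the common approximation billings = revenue + change in deferred revenue.

def schedules(total_value: float, years: int, upfront: bool) -> tuple:
    """Return (annual revenue, annual billings) for a ratably recognised contract."""
    revenue = [total_value / years] * years
    if upfront:
        billings = [total_value] + [0.0] * (years - 1)
    else:
        billings = [total_value / years] * years
    return revenue, billings


def deferred_balances(revenue: list, billings: list) -> list:
    """Year-end deferred revenue: amounts billed but not yet recognised."""
    balance, balances = 0.0, []
    for rev, bill in zip(revenue, billings):
        balance += bill - rev
        balances.append(balance)
    return balances


for label, upfront in (("3-year upfront", True), ("annual", False)):
    rev, bill = schedules(300_000, 3, upfront)
    print(f"{label}: revenue={rev} billings={bill} deferred={deferred_balances(rev, bill)}")
```

Revenue, and so the P&L, is identical in both cases; only the timing of billings and the deferred revenue balance differ. That is why a shift back to annual billing can make billings growth look weaker without changing earnings.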

Portfolio view: We ultimately think AI will lead to more consolidation of spend in the space, and those “platform like” software businesses (we own Microsoft, ServiceNow, Salesforce, Workday and Palo Alto) are the best positioned both from a scale of investment and from a product set perspective (with an ability to leverage AI tools across a broad product suite).

We would note that nothing’s free: all of these businesses will likely need to either increase capex to build out their own infrastructure or buy AI compute capacity from cloud providers, which is likely to weigh on their gross margins. In the context of their existing businesses, AI is still small, so we’re not seeing that yet, but it’s something to watch. The key for us in the portfolio is owning those businesses that can generate a meaningful and sustainable return on invested capital from their AI investments.

Streaming – advertising, re-bundling, churn and content costs

- Streaming continued to be quite a tough market in 2023 – the industry continuing to battle with structurally higher churn and high content costs (amortised over lower subscriber numbers) in a non-zero interest rate world.

- The latest discussions around a Paramount-Warner merger point to consolidation as a necessary and inevitable outcome within the market.

- Disney continued to lose money in streaming, while its cash-cow linear TV businesses succumbed to ongoing structural cord-cutting and declining affiliate fees.

- The question of whether advertising (and not just subscription) is the right business model came back into focus, with Disney and Netflix raising prices and arguably trying to push subscribers down to their advertising tiers. Advertising remains one of the best ways to get to scale as a streaming business and then drive leverage. The fact that everyone is going down the route of acquiring sports content also supports the move towards advertising: the two go hand in hand.

- And so, bundling Disney+/Hulu and ESPN+ might take us full circle back to what made cable so attractive in the first place! (with everyone being forced to pay for sports content whether they like it or not).

- It means that what the broader streaming and content industry will look like in 1-2 years’ time is still very uncertain.

- Netflix definitely showed the most compelling cash flow and profitability profile this year, and its disclosures around viewership and success in licensing content position it as the most likely winner in a potentially winner-takes-all industry. The success of “Suits”, where Netflix generated a “new hit” from an effectively fully depreciated show, is a very effective way to persuade content owners to licence their content to Netflix. In a world where content owners like Disney, Paramount, Universal and Comcast are struggling to get to profitability and scale in streaming, are they forced into a corner where the most obvious thing to do is to licence content to their biggest competitor?

Portfolio view: We’ve been sceptical about long-term returns in streaming – the zero-interest-rate environment of the last several years led to an explosion of new streaming services and sky-high, unsustainable spending on content.

Our only real exposure to streaming is through Sony, which is the perfect non-streaming streaming player: it benefits from content inflation in its Pictures business without having to run a cash-flow-negative streaming platform!

We continue to consider carefully whether Netflix might be reaching an inflection point in its returns profile. The bull case is certainly compelling – with Netflix as the single powerful buyer in a world where all the content owners turn into arms dealers (and, perhaps ultimately, shut down their own returns-diluting streaming businesses).

Regulation and big tech – increasing scrutiny

- Tech has continued a rather fraught battle with regulators across the world in 2023.

- Starting with Microsoft/Activision: after some quite frustrating regulatory responses (which seemed to want to kill the cloud gaming/streaming market before it even got started), the deal was cleared, but with quite significant concessions. As part of its efforts to placate regulators, in particular the UK’s CMA, Microsoft will give Ubisoft the cloud streaming rights for all of Activision Blizzard’s existing PC and console games and for new games released over the next 15 years.

- We think we’re still a long way from streaming having a meaningful impact on the gaming industry but there are lots of reasons to think the industry will move there, and indeed lots of reasons it’s good for consumers (which is why the regulatory view was such a head-scratcher).

- Google had its fair share of time in court, battling both the DoJ over alleged monopolisation of search and advertising (including scrutiny of its payments to Apple for its default search position) and Epic Games in a separate antitrust lawsuit.

- We won’t get a verdict in the DoJ antitrust case for some time, but Google lost its battle with Epic. The details of the case are pretty damning for Google: the core came down to Google making some very large and very opaque deals with game developers (paying them off not to launch on competitor game stores) and OEMs (paying them off to stop shipping with alternative stores) in order to effectively maintain monopoly power.

- The judge will now decide on remedies and Google will appeal – so no big changes in the short term. However, it isn’t insignificant: it is a rare antitrust loss in a US court for one of the big tech players.

- Finally, Adobe and Figma called off their deal last week, citing “no clear path” to regulatory approval, with Adobe paying Figma the $1bn break-up fee.

- Our perspective is that the regulatory view on this deal – in particular the UK CMA’s view that the deal “could eliminate competition in product design software, reduce development of new products and remove Figma as a competitor” – was probably correct. Adobe was willing to pay a very high price for Figma (the final deal price was ~$26bn, over 50x sales), not because Figma is a direct Adobe competitor, but because it risked competitor products (to Photoshop or Illustrator) being built on, or accessed via, Figma’s web platform.

Portfolio view: A more active big tech regulator has much broader implications for the whole tech ecosystem. Big tech acquisitions are not all the same and are not all bad – indeed, they have been a large part of investor returns for the Silicon Valley ecosystem as a whole. The reality is that regulators have historically had little impact on the monopoly power of big tech, and while they are arguably more active recently, we’re not sure that will change.

We sold Adobe, as we continue to question whether it is losing mindshare in the increasingly important collaboration space, something its willingness to buy Figma at such an eye-watering valuation spoke to.

Gaming remains title-driven

- After the post-COVID hangover of 2022, the gaming industry remains in rather a tough spot – showing only marginal growth in 2023, with spend continuing to consolidate around the bigger titles and franchises.

- Take-Two’s GTA 6 has almost the entire industry’s hopes pinned on it, but with the release now slated for 2025, the risk is an air pocket of demand in 2024, though we’ll still get the annual releases from the largest publishers like EA (EA Sports FC – the “new” FIFA) and Activision/Microsoft (Call of Duty).

- Hardware-wise, the lack of big game launches won’t help (software remains the key driver of hardware cycles in gaming), but Nintendo will likely launch its new console next year, and we expect Sony to continue discounting the PS5 (and possibly to launch a mid-generation refresh in the form of a PS5 Pro).

Portfolio view: We own Sony, Nintendo and Microsoft (which has now completed its acquisition of Activision). While we think we’re now largely through the post-COVID gaming hangover, we still focus our exposure on the big franchise-title gaming businesses, which we believe have the highest returns on capital given their ability to keep iterating on and re-releasing strong IP.

Macro – interest rates, inflation and Fed decisions to remain in focus

- No doubt the macro backdrop (interest rates, inflation and Fed decisions) will continue to be a big factor for tech in 2024.

- There’s no getting away from tech being broadly correlated to rates. As a reminder, though, on rising interest rates and “cash burn” as they relate to our portfolio: the companies that are really exposed to interest rate rises are those with leveraged balance sheets. The tech sector as a whole, and certainly the companies we own, don’t have leverage; they’re cash rich and cash generative. Most of our portfolio companies either pay a dividend or have buyback programmes. And to be clear, any volatility we’ve seen this year as a result of rates rhetoric has been in share prices, not in the fundamental earnings and returns of the businesses we own, and that can certainly create opportunities for us as long-term investors.

- Rates also affect tech through the discount rate and the resulting valuation multiple, and we’ve already seen that deflate the tech sector multiple. The most impacted within tech should be the stocks with more of their value in the terminal value – typically the very high-growth, highly rated parts of the sector – which are (just mathematically) more sensitive.
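To make the “just mathematically” point concrete, here is a stylised Python sketch. It assumes the simplest possible valuation, a single cash flow growing at a constant rate forever (the Gordon growth model, V = CF / (r - g)), and uses purely illustrative rates (an 8% discount rate rising by one percentage point, with growth of 2% versus 5%) that are not portfolio figures. The function names are hypothetical. The higher-growth stock, whose value sits further out in the future, loses more value for the same rate rise.

```python
def growing_perpetuity_value(cash_flow: float, discount_rate: float, growth: float) -> float:
    """Gordon growth value of next year's `cash_flow`, growing at `growth` forever."""
    if discount_rate <= growth:
        raise ValueError("discount_rate must exceed growth for the value to be finite")
    return cash_flow / (discount_rate - growth)


def value_change_for_rate_rise(growth: float, base_rate: float, rise: float) -> float:
    """Fractional change in value when the discount rate rises by `rise`."""
    before = growing_perpetuity_value(1.0, base_rate, growth)
    after = growing_perpetuity_value(1.0, base_rate + rise, growth)
    return after / before - 1.0


# Illustrative inputs only (assumptions): 8% discount rate rising by 1 percentage point
for label, g in [("lower-growth stock (g = 2%)", 0.02), ("higher-growth stock (g = 5%)", 0.05)]:
    print(f"{label}: {value_change_for_rate_rise(g, 0.08, 0.01):.1%}")
```

Run as-is, this prints -14.3% for the lower-growth stock and -25.0% for the higher-growth one. A real DCF has an explicit forecast period before the terminal value, but the direction of the effect generally holds: the more of a stock's value that sits in the distant future, the more a higher discount rate hurts it.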

Portfolio view: Our investment philosophy as a team is to focus on companies’ financial productivity and their ability to sustain it over a long period of time given their competitive advantages. This in turn leads to a focus on the cash flows and return on invested capital of the businesses we own, and their ability to compound those (through reinvestment) over time. That focus typically leads us away from the more interest-rate-sensitive parts of the sector. We believe large caps will generally fare better in a potentially weaker economy, and are also better placed to capture upside from AI in the short to medium term. On market levels and valuation, the businesses we own in the portfolio are still fundamentally compounding earnings: the Nasdaq is back where it was at the end of 2021, but for most of the businesses we own, earnings have compounded for two years since then, which makes us comfortable with the price levels and multiples of the stocks we own.
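The point about flat index levels and compounding earnings is simple arithmetic: if the share price is unchanged while earnings grow, the P/E multiple must fall. A minimal sketch, using a hypothetical stock on 25x earnings at the end of 2021 with 15% annual EPS growth (illustrative assumptions, not portfolio data); the function name is also hypothetical:

```python
def multiple_after_compounding(start_multiple: float, annual_eps_growth: float,
                               years: int, price_change: float = 0.0) -> float:
    """P/E after `years` of EPS growth at `annual_eps_growth`, given the share
    price moved by `price_change` (0.0 means flat) over the same period."""
    earnings_factor = (1.0 + annual_eps_growth) ** years
    price_factor = 1.0 + price_change
    return start_multiple * price_factor / earnings_factor


# Hypothetical stock: 25x earnings at end-2021, flat price, EPS +15% a year for two years
print(f"{multiple_after_compounding(25.0, 0.15, 2):.1f}x")  # prints "18.9x"
```

With a flat price, two years of 15% growth takes the multiple from 25x to roughly 18.9x, which is the sense in which compounding earnings can make the same price level look cheaper.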

For enquiries, please contact:

Inge Heydorn, Partner, at inge.heydorn@gpbullhound.com

Jenny Hardy, Portfolio Manager, at jenny.hardy@gpbullhound.com

Nejla-Selma Salkovic, Analyst, at nejla-selma.salkovic@gpbullhound.com

About GP Bullhound

GP Bullhound is a leading technology advisory and investment firm, providing transaction advice and capital to the world’s best entrepreneurs and founders. Founded in 1999 in London and Menlo Park, the firm today has 14 offices spanning Europe, the US and Asia.